Recommended in This Issue

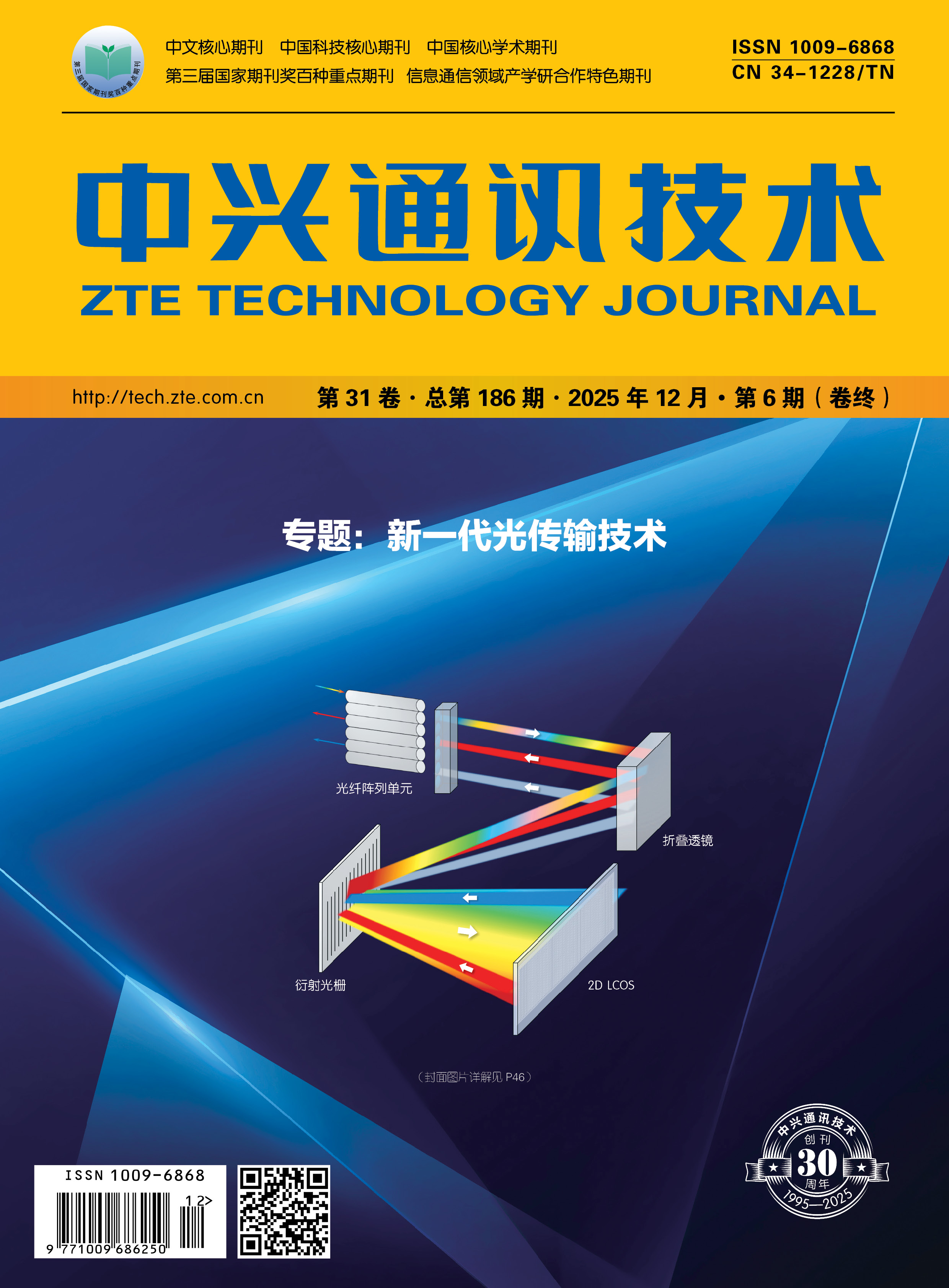

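Fast and Accurate Optical Fiber Channel Modeling Based on a Low-Complexity Transformer

Authors: SHI Minghui, ZHENG Zhixiong, NIU Zekun, YI Lilin

[Introduction] Optical fiber channel modeling is important for characterizing fiber properties and for developing advanced digital signal processing algorithms. The physics-based split-step Fourier method (SSFM) requires a large number of iterative computations, and its high complexity limits its applicability. This paper proposes a fiber channel modeling method based on a low-complexity Transformer architecture. Starting from the conventional Transformer, absolute position encoding is replaced with relative position encoding and global attention is replaced with sliding-window attention, which further improves how well the model captures fiber nonlinear characteristics. The results show that the effective signal-to-noise ratio (ESNR) error between the proposed method and SSFM is only 0.15 dB, and that computation time is 69.9% lower than with the conventional Transformer and 96.9% lower than with SSFM, confirming that the method is accurate while greatly reducing computational complexity.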
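To make the two architectural changes named in the abstract concrete, here is a minimal NumPy sketch of single-head attention with a sliding window and a relative position bias. It is not the authors' model: the function name `windowed_attention`, the `window` half-width, and the learned-bias vector `rel_bias` are all assumptions introduced for illustration.

```python
import numpy as np

def windowed_attention(q, k, v, window, rel_bias):
    """Single-head attention restricted to a local window (illustrative only).

    q, k, v  : arrays of shape (seq_len, d)
    window   : position i may attend to j only if |i - j| <= window
    rel_bias : array of length 2*window + 1; the entry at index
               (j - i + window) is added to the score of pair (i, j),
               so the bias depends on relative offset, not absolute position
    """
    seq_len, d = q.shape
    scores = q @ k.T / np.sqrt(d)

    idx = np.arange(seq_len)
    offset = idx[None, :] - idx[:, None]           # offset[i, j] = j - i
    in_window = np.abs(offset) <= window

    # Look up the relative-position bias; out-of-window offsets are clipped
    # here only to keep the index valid, they are masked out just below.
    bias = rel_bias[np.clip(offset + window, 0, 2 * window)]
    scores = np.where(in_window, scores + bias, -np.inf)

    # Row-wise softmax; the diagonal is always in the window, so every row
    # has at least one finite score.
    scores = scores - scores.max(axis=1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=1, keepdims=True)
    return weights @ v, weights
```

This dense version still builds the full score matrix, so it only illustrates the masking and bias logic. A cost reduction of the kind the abstract reports would require computing only the in-window scores, which lowers the attention cost from quadratic in sequence length to roughly sequence length times window size.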

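Some Thoughts on AI Native

Author: HE Baohong

[Introduction] Artificial intelligence is applied in two main modes. The first supports the upgrade and transformation of traditional industries by making existing systems intelligent. The second relies on AI-native design to give rise to new technologies, products, and services, building entirely new intelligent systems. Although the concept of AI native has been widely accepted, related exploration is still at a relatively early stage and its theoretical substance is not yet well developed. This paper discusses observations and reflections on AI native from three perspectives (technology, business, and people) and looks ahead to its development trends, in the hope of offering a useful reference for putting AI into practice and for innovation in application models.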

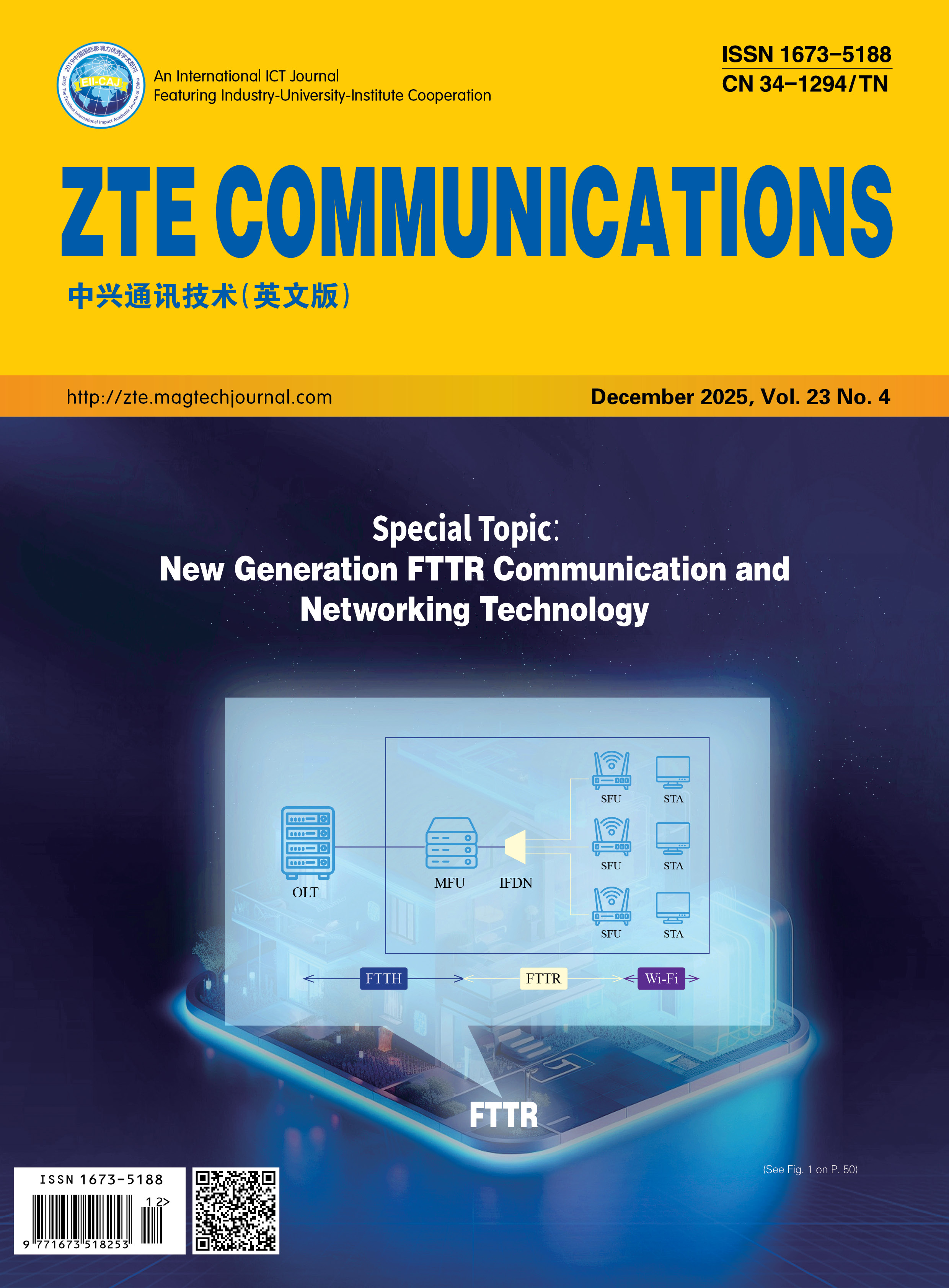

FTTR-MmWave Architecture for Next-Generation Indoor High-Speed Communications

CHEN Zhe, ZHOU Peigen, WANG Long, HOU Debin, HU Yun, CHEN Jixin, HONG Wei

[Introduction] Millimeter-wave (mmWave) technology has been extensively studied for indoor short-range communications. In such fixed network applications, the emerging Fiber-to-the-Room (FTTR) architecture allows mmWave technology to be cascaded with in-room optical network terminals, supporting high-speed communication at rates over tens of Gb/s. In this FTTR-mmWave system, the severe signal attenuation over distance and high penetration loss through room walls are no longer bottlenecks for practical mmWave deployment. Instead, these properties create high spatial isolation, which prevents mutual interference between data streams and ensures information security. This paper surveys the promising integration of FTTR and mmWave access for next-generation indoor high-speed communications, with a particular focus on the Ultra-Converged Access Network (U-CAN) architecture. It is structured in two main parts: it first traces this new FTTR-mmWave architecture from the perspective of Wi-Fi and mmWave communication evolution, and then focuses specifically on the development of key mmWave chipsets for FTTR-mmW Wi-Fi applications. This work aims to provide a comprehensive reference for researchers working toward immersive, untethered indoor wireless experiences for users.
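As a rough illustration of the "severe signal attenuation over distance" mentioned above, the standard free-space path loss formula can be evaluated for a short in-room link. The abstract does not name a frequency band, so the 60 GHz carrier and 10 m distance below are assumptions chosen only for the example, and wall penetration loss is not modeled.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0

def free_space_path_loss_db(distance_m: float, freq_hz: float) -> float:
    """Free-space path loss in dB: 20 * log10(4 * pi * d * f / c)."""
    return 20.0 * math.log10(
        4.0 * math.pi * distance_m * freq_hz / SPEED_OF_LIGHT_M_S
    )

# Assumed example values (not from the paper): 60 GHz carrier, 10 m link.
loss_60ghz = free_space_path_loss_db(10.0, 60e9)
# Same distance at an assumed 5 GHz carrier, for comparison.
loss_5ghz = free_space_path_loss_db(10.0, 5e9)
print(f"60 GHz: {loss_60ghz:.1f} dB, 5 GHz: {loss_5ghz:.1f} dB")
```

Under these assumptions the 60 GHz link loses roughly 88 dB over 10 m, about 21.6 dB more than the 5 GHz link at the same distance, which is the kind of gap that keeps each room's signal largely confined to that room.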

Empowering Grounding DINO with MoE: An End-to-End Framework for Cross-Domain Few-Shot Object Detection

DONG Xiugang, ZHANG Kaijin, NONG Qingpeng, JU Minhan, TU Yaofeng

[Introduction] Open-set object detectors, as exemplified by Grounding DINO, have attracted significant attention due to their remarkable performance on in-domain datasets like Common Objects in Context (COCO) after only few-shot fine-tuning. However, their generalization capabilities in cross-domain scenarios remain substantially inferior to their in-domain few-shot performance. Prior work on fine-tuning Grounding DINO for cross-domain few-shot object detection has primarily focused on data augmentation, leaving broader systemic optimizations unexplored. To bridge this gap, we propose a comprehensive end-to-end fine-tuning framework specifically designed to optimize Grounding DINO for cross-domain few-shot scenarios. In addition, we propose Mixture-of-Experts (MoE)-Grounding DINO, a novel architecture that integrates the MoE architecture to enhance adaptability in cross-domain settings. Our approach demonstrates a significant 15.4 Mean Average Precision (mAP) improvement over the Grounding DINO baseline on the Roboflow20-VL benchmark, establishing a new state of the art for cross-domain few-shot object detection (CD-FSOD). The source code and models will be made available upon publication.
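For readers unfamiliar with Mixture-of-Experts layers, the following NumPy sketch shows generic top-k expert routing. It is not the MoE-Grounding DINO architecture, whose details the abstract does not give: the function `moe_forward`, the linear experts, and the `top_k` default are hypothetical choices made for illustration.

```python
import numpy as np

def moe_forward(x, gate_w, expert_ws, top_k=2):
    """Generic top-k Mixture-of-Experts layer with linear experts (sketch).

    x         : (tokens, d) input features
    gate_w    : (d, n_experts) router weights
    expert_ws : (n_experts, d, d) one weight matrix per expert
    """
    logits = x @ gate_w                                   # (tokens, n_experts)

    # Pick the top_k highest-scoring experts for every token.
    top_idx = np.argsort(logits, axis=1)[:, -top_k:]      # (tokens, top_k)
    top_logits = np.take_along_axis(logits, top_idx, axis=1)

    # Softmax over the selected experts only, so the gates sum to 1 per token.
    top_logits = top_logits - top_logits.max(axis=1, keepdims=True)
    gates = np.exp(top_logits)
    gates = gates / gates.sum(axis=1, keepdims=True)

    out = np.zeros_like(x, dtype=float)
    for slot in range(top_k):
        for e in range(expert_ws.shape[0]):
            chosen = top_idx[:, slot] == e                # tokens routed to e
            if chosen.any():
                expert_out = x[chosen] @ expert_ws[e]
                out[chosen] += gates[chosen, slot][:, None] * expert_out
    return out, top_idx
```

Because each token activates only `top_k` experts, capacity can grow with the number of experts while per-token compute stays roughly constant, which is the usual motivation for using MoE to adapt one model to several domains.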
